I recently embarked on a fascinating journey through the world of web scraping, specifically targeting Fiverr's "Data Processing" category. The goal was to extract valuable gig information from the listing pages—a task that turned out to be both challenging and incredibly rewarding. Let me take you through the adventure, revealing not just the 'what' but the 'how' of scraping one of the most vibrant freelance marketplaces on the internet.

Venturing Into Fiverr with Scrapy and ScraperAPI

Fiverr, with its vast array of gigs, is a goldmine of data for anyone looking to analyze market trends or extract specific service information. However, its robust anti-bot mechanisms and heavily JavaScript-rendered pages make it a tough nut to crack. My tool of choice for this mission was the Scrapy framework, a powerhouse in the world of Python automation and web scraping. But even the mighty Scrapy needs a companion when facing a giant like Fiverr. That's where ScraperAPI comes into play.

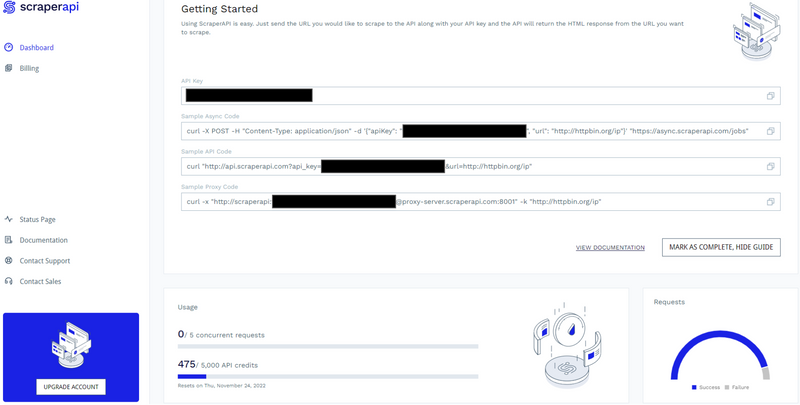

ScraperAPI: The Key to Fiverr's Gates

ScraperAPI is a proxy solution built to simplify web scraping at scale. It does the heavy lifting of managing proxies, dealing with CAPTCHAs, and rendering JavaScript. After signing up and obtaining my API key, I was set to integrate it into my Scrapy project. This integration let me get past Fiverr’s defenses, avoiding IP blocks and rendering the JavaScript needed to reach the gig data I was after. The key goes into the project's configuration as a single line:

SCRAPER_API_KEY={INSERT_API_KEY_WITHOUT_THE_CURLY_BRACES}
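As a rough illustration of what the integration can look like, here is a minimal sketch that wraps a target URL in a ScraperAPI request URL. It assumes ScraperAPI's HTTP endpoint with its api_key, url, and render query parameters, and that the key is available as the SCRAPER_API_KEY environment variable. The helper name build_scraperapi_url is hypothetical and is not taken from the original project.

```python
import os
from urllib.parse import urlencode

# Assumed ScraperAPI HTTP endpoint; check the current docs before relying on it.
SCRAPERAPI_ENDPOINT = "https://api.scraperapi.com/"


def build_scraperapi_url(target_url, api_key=None, render=True):
    """Wrap target_url so the request is routed through ScraperAPI.

    Hypothetical helper for illustration; not part of Scrapy or ScraperAPI.
    """
    # Fall back to the SCRAPER_API_KEY environment variable.
    api_key = api_key or os.environ.get("SCRAPER_API_KEY")
    if not api_key:
        raise ValueError("Set SCRAPER_API_KEY or pass api_key explicitly")

    params = {"api_key": api_key, "url": target_url}
    if render:
        # Ask ScraperAPI to render JavaScript before returning the HTML.
        params["render"] = "true"
    # urlencode percent-encodes the target URL so it survives as one parameter.
    return SCRAPERAPI_ENDPOINT + "?" + urlencode(params)
```

Inside a spider, the wrapped URL would be passed to scrapy.Request in place of the raw Fiverr URL, so every page fetch goes through the proxy.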

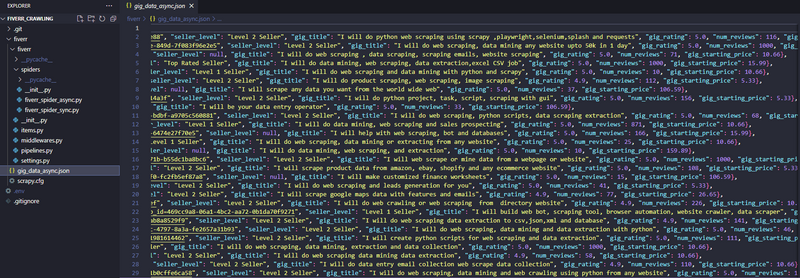

To launch the scraper, navigate to the fiverr folder and run scrapy crawl SPIDER_NAME, replacing SPIDER_NAME with the name of the spider you want to run. The result? A JSON file brimming with gig listings, including seller names, gig URLs, titles, ratings, and more.

Crafting the Spiders: Sync vs. Async

I created not one but two spiders, fiverr_spider_sync and fiverr_spider_async, to explore both synchronous and asynchronous modes of crawling. The former takes the traditional approach of waiting for each page to load and parse before moving on to the next. The latter unleashes the power of concurrency, firing multiple requests at once and significantly speeding up the process. Scrapy itself is asynchronous under the hood in both cases, so "synchronous" here describes the one-page-at-a-time crawl order rather than blocking I/O.

    # Synchronous pagination logic snippet
    next_page = response.css("li.pagination-arrows > a::attr(href)").get()
    if next_page and int(current_page) <= 10:
        yield scrapy.Request(
            next_page,
            callback=self.parse,
        )

The asynchronous spider, fiverr_spider_async, brought the advantage of speed and efficiency, a critical factor when scaling up the scraping operation.
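One way to get that concurrency is to build every listing-page URL up front instead of chaining next-page links, so the scheduler has all the pages available at once. The sketch below covers only the URL-generation half. The category URL, the page query parameter, and the cap of 10 pages are assumptions made for illustration, and listing_page_urls is a hypothetical helper rather than code from the project.

```python
from urllib.parse import urlencode

# Assumed listing URL and page cap; adjust both to the real project.
BASE_URL = "https://www.fiverr.com/categories/data/data-processing"
LAST_PAGE = 10


def listing_page_urls(base_url=BASE_URL, last_page=LAST_PAGE):
    """Return all listing-page URLs, pages 1 through last_page inclusive."""
    return [
        f"{base_url}?{urlencode({'page': page})}"
        for page in range(1, last_page + 1)
    ]
```

A spider's start_requests method could then yield one scrapy.Request per URL. Scrapy keeps several of them in flight at a time, up to the CONCURRENT_REQUESTS setting, rather than waiting for each page to finish before requesting the next.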

Best Practices: Scrapy and ScraperAPI

Proper configuration is key to optimizing the scraping process. In my project, I made sure to tweak Scrapy’s settings to accommodate ScraperAPI’s features and ensure smooth operation. From adjusting retry times to managing download timeouts, every setting was calibrated for the best possible performance.

    custom_settings_dict = {
        # Custom settings for optimal performance
    }
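The project's actual values are not shown here, so the following is only a sketch of what such a dictionary might contain. The setting names are standard Scrapy settings; the numbers are illustrative assumptions, not the ones used in the project, and example_custom_settings is a hypothetical name.

```python
# Illustrative values only; tune them to your ScraperAPI plan and target site.
example_custom_settings = {
    # Retry a failed request up to 5 times after the first attempt.
    "RETRY_TIMES": 5,
    # Seconds to wait for a response; JavaScript-rendered pages can be slow.
    "DOWNLOAD_TIMEOUT": 60,
    # Upper bound on requests Scrapy keeps in flight at once.
    "CONCURRENT_REQUESTS": 5,
}
```

A dictionary like this can be assigned to a spider's custom_settings class attribute, which overrides the project-wide settings for that spider only.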

Navigating Challenges and Triumphs

The journey wasn’t without its hurdles. From initially underestimating Fiverr’s anti-bot safeguards to fine-tuning the async spider for maximum efficiency, each challenge was a learning opportunity. The result? A streamlined, effective tool capable of extracting a wealth of information from Fiverr’s bustling market.

In Conclusion

This adventure into the world of web scraping has been a testament to how well Scrapy and ScraperAPI work together. Whether you’re a data enthusiast, a market analyst, or a curious coder, the road to extracting rich data from dynamic platforms like Fiverr is now more accessible than ever. Feel free to dive into the GitHub repository for a closer look at the project, and perhaps start your own scraping journey.

Should you have any questions or ideas you'd like to discuss, I'm always open to a conversation. Let's connect on LinkedIn and explore the endless possibilities that web scraping brings to the table.
